How Racial Bias in Tech Has Developed the “New Jim Code”

In recent years, machine learning and artificial intelligence (AI) have gained popularity as tools for artistic analysis and historical reconstruction. This technology is often touted as a route to true objectivity, but it is not free from the subjective perspectives of its creators. Scholars and critics have adopted seemingly impartial digital and mathematical approaches to measure beauty, trustworthiness, or historical accuracy, and in doing so they have woven their own biases into the fabric of their work. The notion that beauty can be defined by a mathematical formula goes back to the ancient Greek idea of the golden ratio. Today, computer scientists tout machine learning as a way to assess supposedly objective standards of beauty. Machine learning is a field of AI that uses mathematical algorithms to teach machines to analyze large amounts of data. However, entrusting beauty to the eye of the robot beholder has exposed some problems.

In 2016, the first beauty contest judged by artificial intelligence was launched online. The creators of Beauty.AI soon discovered that the robot jury, which evaluated more than 6,000 entries, “did not like people with dark skin.” Only one of the 44 winners had dark skin. Beauty.AI’s developer, Youth Laboratories (an AI lab based in Russia and Hong Kong), acknowledged the racism inherent in what it calls its “deep learning” algorithms. After a second iteration of the contest, the lab launched an initiative called Diversity.AI. The new effort promised a discussion forum for more inclusive code writing, along with freely downloadable datasets that would allow developers to train machines using “minority data sets.”

The prejudices built into the programmers and datasets behind Beauty.AI and many other AI projects are explored in a new book, Race After Technology, by Ruha Benjamin. Benjamin is an associate professor of African American Studies at Princeton University and founder of the Ida B. Wells Just Data Lab. Race After Technology demonstrates that algorithms often amplify hierarchies and replicate social divisions precisely by ignoring their existence. Here Benjamin echoes Columbia Law Professor and “predictive policing” expert Bernard E. Harcourt, who warns about the dangers of a populace conditioned to regard AI and machine learning as unbiased. In a 2016 article for the Guardian, Harcourt stated, “The [Beauty.AI] case is a reminder that humans are really doing the thinking, even when it’s couched as algorithms and we think it’s neutral and scientific.” Benjamin, Harcourt, and other academics such as Safiya Umoja Noble have continued to sound the alarm over popular faith in the impartiality of AI. Noble argues that AI supports “technological redlining,” a form of racial profiling built into the algorithms used by everyone from Google to law enforcement. Even Twitter’s new image-cropping algorithm privileges lighter faces over darker ones. In Race After Technology, Benjamin calls these “subtle but no less hostile form[s] of systemic bias” the “New Jim Code.”

Examples of the “New Jim Code” also appear in academic publications. A team of French cognitive scientists led by Nicolas Baumard claimed to have tracked “historical changes in trustworthiness using machine learning analyses of facial cues in paintings.” Without involving any art historians or artists, the team designed an algorithm to generate “trustworthiness evaluations.” These evaluations were based on the facial actions (e.g., smiles and eyebrow movements) depicted in European portraits drawn from two databases of paintings: the National Portrait Gallery (1,962 English portraits from 1505–2016) and the Web Gallery of Art (4,106 portraits from 19 Western European countries, 1360–1918). The researchers claimed their “results show that trustworthiness in portraits increased over the period 1500–2000 paralleling the decline of interpersonal violence and the rise of democratic values observed in Western Europe.” They also asserted an “association between GDP and the rise of trustworthiness.”

Algorithmic bias is also implicit in the online image search engines that millions of users rely on every day to find digitized art and photographs. A simple comparison of Google searches for “black girls” and “white girls” reveals how racism and sexism shape search results. In Algorithms of Oppression: How Search Engines Reinforce Racism, Noble shows how such results misrepresent Black women and girls with derogatory and hypersexualized images.

Even when designed primarily for amusement, tech products reveal human bias. For instance, the Google Arts & Culture platform offers a popular app for exploring online exhibits and museum collections. It includes a selfie-matching feature that pairs an uploaded selfie with a similar-looking artwork. Although the app aims to make exploring art fun, many people of color have reported that their selfies were matched with artworks depicting racist stereotypes, including exoticized figures and people shown in subservient roles.

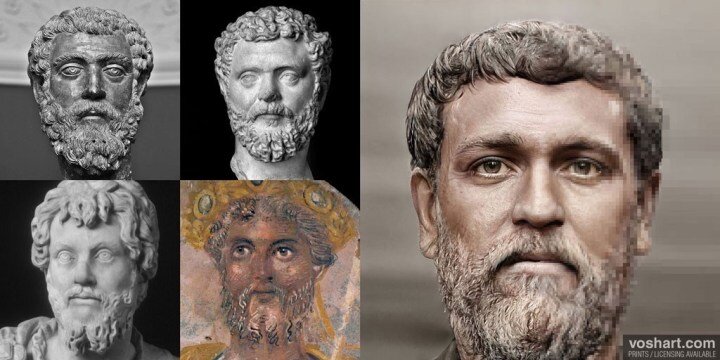

Artists have also touted the benefits of AI and machine learning for historical reconstructions. Instead of baking, gardening, or making TikToks during quarantine, designer Daniel Voshart created color portraits of Roman emperors. However, Voshart’s work and similar efforts, like the History in 3D project, are not harmless diversions. They are potentially dangerous projects that could lend credence to the long-discredited notion of a white ancient Mediterranean world.

Voshart is a Toronto-based cinematographer and virtual reality (VR) specialist. He created images of 54 emperors of the Principate, from Augustus (who came to power in 27 BCE) through Numerian (who died in 284 CE). The series has since garnered widespread attention across the internet, including coverage in outlets such as Smithsonian Magazine and Mashable. Among the 54 images is one portrait of a woman, Ulpia Severina, who may have reigned on her own after the death of her husband Aurelian.

Starting from images of busts and then using Photoshop and Artbreeder, a machine learning platform for blending photographs, Voshart generated what he calls “photoreal” images. His project has gained attention in part because it transforms the white marble sculptures one typically sees into strikingly lifelike portraits that imitate the original polychromy. For instance, Augustus is reconstructed with beige skin, gray eyes, and sandy blond hair. Each portrait is unique. In his Medium post, Voshart asserts that he drew on historical texts, coinage, and sculpture in crafting his images, paying attention to age, hair, eyes, and ethnicity. He also admits that his project involves artistic interpretation and that he created composite images where no busts were available. Voshart explains, “My goal was not to romanticize emperors or make them seem heroic.” Still, because these images seem so realistic, they could easily be mistaken for accurate reconstructions, especially when neatly assembled into a poster and offered for sale.

Voshart’s use of machine learning lends his reconstructions a veil of scientific merit. Yet, as he told Smithsonian Magazine, “These are all, in the end, … my artistic interpretation where I am forced to make decisions about skin tone where none [are] available.” This use of technology may seem innocuous, but human bias is embedded in it. One need only look back at the photographic color-correction guides known as “Shirley cards,” which were calibrated to the pale skin of a Kodak employee named Shirley, to see how color standards can encode bias.

Although recent headlines about Voshart’s work tout his use of machine learning to reconstruct imperial portraits, the results still rest on his own artistic choices. Ancient artists painted marble sculptures in rich, vibrant colors, but very few busts retain any paint. And even where paint survives, the skin tones it shows were themselves artistic interpretations rather than accurate records of what the sitters looked like.

Because of the choices Voshart made, his work does not display the ethnic diversity of the ancient world. It also addresses only the emperors of the Roman Empire rather than the general populace of the ancient Mediterranean. Certainly, one can grant some artistic license to such interpretations. Yet while Voshart’s images include a range of hair textures, eye colors, and skin colors, none of the portraits initially depicted anyone with brown skin or tightly curled hair. The one initial exception was the somewhat darker skin tone of his rendering of the emperor Septimius Severus. The result is a set of predominantly light-skinned portraits that does not reflect the multiethnic ancient Mediterranean world.

In comments to Hyperallergic, Voshart said that he had tried to darken the skin of Septimius Severus to match a well-known painted Severan tondo but had difficulties with Artbreeder and its neural network. When asked about his use of idealized portraits and sculpture, the designer noted: “I suppose, coming from a film background I’m a bit less concerned about making historical depictions. A historian such as yourself [i.e. Sarah Bond] — I’m guessing — would be too concerned about every little detail that you would never pursue such a project.” Yet Voshart is responsible for selecting and manipulating the source material, and his use of the technology is not neutral.

In response to questions from Hyperallergic and criticism from classicists on Twitter, Voshart has altered some of the portraits, in part to offer a slightly wider range of skin tones. Such alterations only underscore that these portraits are primarily artistic rather than realistic. Using the same textual sources, artistic images, and technology, different individuals could create portraits markedly different from Voshart’s. And yet we have already seen readers and Twitter users treating his artistic reconstructions as if they were actual photographs or accurate polychromatic reconstructions like those produced by polychromy scholars such as Mark Abbe or Vinzenz Brinkmann.

To be sure, Voshart’s Roman emperors may spur interest in ancient history, but it is important to emphasize that color reconstructions carry their own caveats. For instance, the new History in 3D project touts its own polychromatic reconstructions of white-appearing emperors and tags them with the hashtag #heritage. Comparing how different reconstructions of the same figure diverge could be useful in the classroom. Letting students use the technology to build images themselves could help them better understand both the diversity of the ancient world and the limitations of such technology. However, not all users are aware that these portraits are only loosely based on textual and aesthetic sources, usually without any surviving paint on the bust to indicate the skin color of the original (and even paint on busts reflected an idealized artistic choice). Students and the public alike should recognize that even with the best available technology, no artist or scientist can create a definitive, frozen portrait of a resident of the ancient Mediterranean. We will never know with certainty what someone in the ancient world actually looked like, no matter how realistic a generated portrait may appear.

When machine learning and computing are emphasized in artistic research, reconstructions, or beauty contests, viewers often take the results to be scientific, objective, and unbiased. When these findings and reconstructions are then popularized and widely disseminated, they are readily accepted as historical reality. AI provides tools to analyze large swaths of data and to conjure lifelike images, but any attempt to remake the past must be accompanied by greater awareness of the bias that can enter AI and machine learning at every phase, from programming to design and implementation. Like the white statues of antiquity, inaccurate and idealized images of the past and the present have a way of becoming so firmly embedded in our society that we have difficulty imagining them otherwise.